Why Did Elon Musk Just Offer to Buy Control of OpenAI for $100 Billion?

OpenAI needs to fairly compensate its nonprofit board for giving up control. Musk just made that math a lot more complicated.

Wow. The Wall Street Journal just reported that, "a consortium of investors led by Elon Musk is offering $97.4 billion to buy the nonprofit that controls OpenAI."

Technically, they can't actually do that, so I'm going to assume that Musk is trying to buy all of the nonprofit's assets, which include governing control over OpenAI's for-profit, as well as all the profits above the company's profit caps.

OpenAI CEO Sam Altman already tweeted, "no thank you but we will buy twitter for $9.74 billion if you want." (Musk, for his part, replied with just the word: "Swindler.")

Even if Altman were willing, it's not clear if this bid could even go through. It can probably best be understood as an attempt to throw a wrench in OpenAI's ongoing plan to restructure fully into a for-profit company. To complete the transition, OpenAI needs to compensate its nonprofit for the fair market value of what it is giving up.

In October, The Information reported that OpenAI was planning to give the nonprofit at least 25 percent of the new company, a stake worth $37.5 billion at the time. But in late January, the Financial Times reported that the nonprofit might only receive around $30 billion, "but a final price is yet to be determined." That's still a lot of money, but many experts I've spoken with think it drastically undervalues what the nonprofit is giving up.

Musk has sued to block OpenAI's conversion, arguing that he would be irreparably harmed if it went through.

But while Musk's suit seems unlikely to succeed, his latest gambit might significantly drive up the price OpenAI has to pay.

(My guess is that Altman will still manage to make the restructuring happen, but every dollar given to the nonprofit is one that can't be offered to future funders, potentially dramatically limiting OpenAI's fundraising prospects. Given how quickly the company burns through cash, this could be a real problem.)

The timing is also critical. As a condition of taking $6.6 billion in new investment last October, OpenAI agreed to complete its for-profit transition within two years. If it doesn't hit that deadline, those investors can ask for their money back.

The control premium

Here's why this matters: OpenAI's nonprofit board technically controls the company and has a fiduciary duty to "humanity" rather than to investors or employees.

Pricing the relinquishing of control may be a non-starter, according to Michael Dorff, the executive director of the Lowell Milken Institute for Business, Law, and Policy at UCLA. Dorff told me in October:

If [AGI’s] going to come in five years, it could be worth almost infinite amounts of money, conceivably, right? I mean, we'll all be sitting on the beach drinking piña coladas, or we'll all be dead. I'm not sure which. So, this is very difficult, and it is likely to be litigated.

Musk's bid seems to be trying to put a floor on that price — one that is much higher than numbers that were previously thrown around.

The "control premium" — how much extra you pay to get control of a company versus just buying shares — typically ranges from 20-30%, but can go as high as 70% of the company's value. With OpenAI reportedly in talks to raise more money at up to a $300 billion valuation, that would mean the nonprofit's control could be worth anywhere from $60-210 billion.
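To make that arithmetic easy to check, here is a minimal sketch in Python. It assumes, as the paragraph above does, that the premium is applied as a flat percentage of the reported $300 billion valuation. The function names are mine, purely for illustration. The last step treats the whole $97.4 billion bid as if it were a pure control premium, which is a simplification, since the bid would also cover the nonprofit's other assets.

```python
# Back-of-the-envelope control-premium math (all dollar figures in billions).
# Assumption: the premium is a flat percentage of the company's valuation.

def control_value(valuation_bn: float, premium_pct: float) -> float:
    """Dollar value of control at a given premium percentage."""
    return valuation_bn * premium_pct / 100


def implied_premium_pct(bid_bn: float, valuation_bn: float) -> float:
    """Premium percentage implied if a bid were purely for control."""
    return bid_bn / valuation_bn * 100


VALUATION_BN = 300   # reported valuation in the fundraising talks
MUSK_BID_BN = 97.4   # consortium's offer, per the Wall Street Journal

low = control_value(VALUATION_BN, 20)    # bottom of the typical range
high = control_value(VALUATION_BN, 70)   # top of the cited range
implied = implied_premium_pct(MUSK_BID_BN, VALUATION_BN)

print(f"Control value range: ${low:.0f}-{high:.0f} billion")
print(f"Premium implied by the bid: {implied:.1f}%")
```

Under those assumptions, the bid sits a little above the typical 20-30% band and well short of the 70% ceiling.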

Musk's bid makes this math problem a lot more concrete. His group is offering $97.4 billion AND promising to match any higher bids. This means the nonprofit board now has to explain why they'd accept less.

Conversion significance

In addition to transferring control from the nonprofit board to a new for-profit public benefit corporation (PBC), OpenAI is reportedly trying to remove existing caps on investor returns.

At a glance, this conversion may not seem that significant. OpenAI already behaves like a for-profit company in many ways. Almost all of its employees work for the for-profit arm, and the profit motive blew through the nonprofit’s guardrails over a year ago, when the board fired Altman only to reinstate him less than five days later in the face of an employee and investor revolt.

But OpenAI’s nonprofit board still technically governs the company and is currently set up to receive 100% of the profits once various investors’ profit caps are hit. Those caps are as high as 100 times the initial investment, according to the Wall Street Journal. The nonprofit also gets to decide when AGI is "achieved," which gets the company out of its obligation to share its technology with Microsoft, OpenAI’s primary investor.

You may think these profit caps are a ridiculous marketing ploy that will never be reached. But Altman, OpenAI, and many of its employees think the company really could make trillions — or much, much more — in profits. And if those caps go away, it becomes even harder to imagine the profits will be shared with those who lose out from the development of AGI.

Musk's suit

A federal judge recently announced that parts of Musk's lawsuit against OpenAI will go to trial. The core of Musk's argument is that OpenAI betrayed its founding nonprofit mission by pursuing a for-profit structure. The judge called Musk's claims a "stretch," but decided to let the trial move forward anyway. “It is plausible that what Mr. Musk is saying is true. We’ll find out. He’ll sit on the stand,” she said.

Musk is a particularly unsympathetic plaintiff here, given that OpenAI has published emails showing that he knew about the for-profit plans years before they took place. He has since founded a different for-profit AI company, xAI. But that's not to say that he doesn't have a point.

Encode, a nonprofit advocacy group that co-sponsored California's AI safety bill SB 1047, also joined the fray in December, filing an amicus brief in support of Musk's position. The brief argues that OpenAI's conversion would "undermine" its mission to develop transformative technology safely and for public benefit.

(Encode receives funding from the Omidyar Network, where I am currently a Reporter in Residence.)

The brief argues that if we truly are on the cusp of artificial general intelligence (AGI), "the public has a profound interest in having that technology controlled by a public charity legally bound to prioritize safety and the public benefit rather than an organization focused on generating financial returns for a few privileged investors." The brief authors point out that OpenAI's conversion would replace its "fiduciary duty to humanity" with a legal requirement to balance public benefit against "the pecuniary interests of stockholders." Most strikingly, they argue that control over AGI development is a "priceless" charitable asset that shouldn't be sold at any price, since OpenAI itself claims AGI will be "built exactly once" and could so transform society that "money itself ceases to have value."

As Encode's brief highlights, this is about more than just corporate drama. OpenAI is explicitly trying to build AGI, which the company defines as "a highly autonomous system that outperforms humans at most economically valuable work." Altman, along with hundreds of leading AI researchers, has warned that the technology could result in human extinction.

The legal context here is important. The California and Delaware Attorneys General (AGs) each have the ability to void the for-profit conversion. Experts tell me that the key question is whether the nonprofit is fairly compensated.

Also in late December, Delaware AG Kathleen Jennings filed her own amicus brief making clear her office would scrutinize any restructuring. The brief emphasized that she has both the authority and responsibility to ensure OpenAI's transaction protects the public interest.

She writes that, under Delaware law, the AG must review whether:

The charitable purpose of OpenAI's assets would be lost or impaired

Any for-profit entity will adhere to the existing charitable purpose

OpenAI's directors are meeting their fiduciary duties

The transaction satisfies Delaware's "entire fairness" test

Most notably, the brief warned that if the AG concludes the restructuring isn't consistent with OpenAI's mission or that board members aren't fulfilling their duties, "Delaware will not hesitate to take appropriate action to protect the public interest."

That's why the nonprofit board's control was supposed to matter in the first place. It was meant to ensure that if OpenAI succeeded in building AGI, the technology would benefit humanity as a whole rather than just enriching investors. Its fiduciary duty is literally to all of humanity.

The stakes

And humanity needs all the help it can get.

The first ever International AI Safety Report — AI's closest thing to an Intergovernmental Panel on Climate Change (IPCC) report — just came out. It's not a comforting read.

The report, backed by 30 countries and authored by over 100 AI experts, outlines several concerning pathways to "loss of control" — scenarios where AI systems operate outside of meaningful human oversight. It warns that more capable AI systems are beginning to display early versions of "control-undermining capabilities" like deception, strategic planning, and "theory of mind" (the ability to model human beliefs and intentions). The authors observe that in addition to getting better at scheming against us, AI models have rapidly improved at tasks required for making biological and chemical weapons — a pairing that should give us all pause.

Perhaps most worryingly, the authors note that competitive pressures between companies and countries could lead them to accept larger risks to stay ahead, making proper safety measures less likely. While experts disagree on timing and likelihood, the report emphasizes that if extremely rapid progress occurs, it's impossible to rule out loss of control scenarios within the next several years.

And in the last nine months, OpenAI has seen an exodus of safety researchers — many of whom are warning that the world is not ready for what the company is trying to build. Late last month, another announced his departure, tweeting that, “No lab has a solution to AI alignment today. And the faster we race, the less likely that anyone finds one in time.”

The question at hand isn't just whether $97.4 billion is a fair price for the nonprofit's control. It's whether any price is high enough to justify removing the guardrails designed to keep humanity's interests ahead of investor returns at this critical moment in AI's development.

Some other recent writing of mine

Earlier in the month, I published an op-ed in the SF Standard titled "Marc Andreessen just wants you to think DeepSeek is a Sputnik moment." Here’s the accompanying thread.

I also wrote a thread criticizing a NY Times opinion piece by Zeynep Tufekci on DeepSeek that began like this:

I'm usually a fan of Zeynep's work, but this piece gets things exactly backwards. Her core argument is that DeepSeek makes "nonsense" of US efforts to contain the spread of AI, which have largely involved restricting the types of AI chips China can access.

Zeynep said she will circle back, and I look forward to her reply!

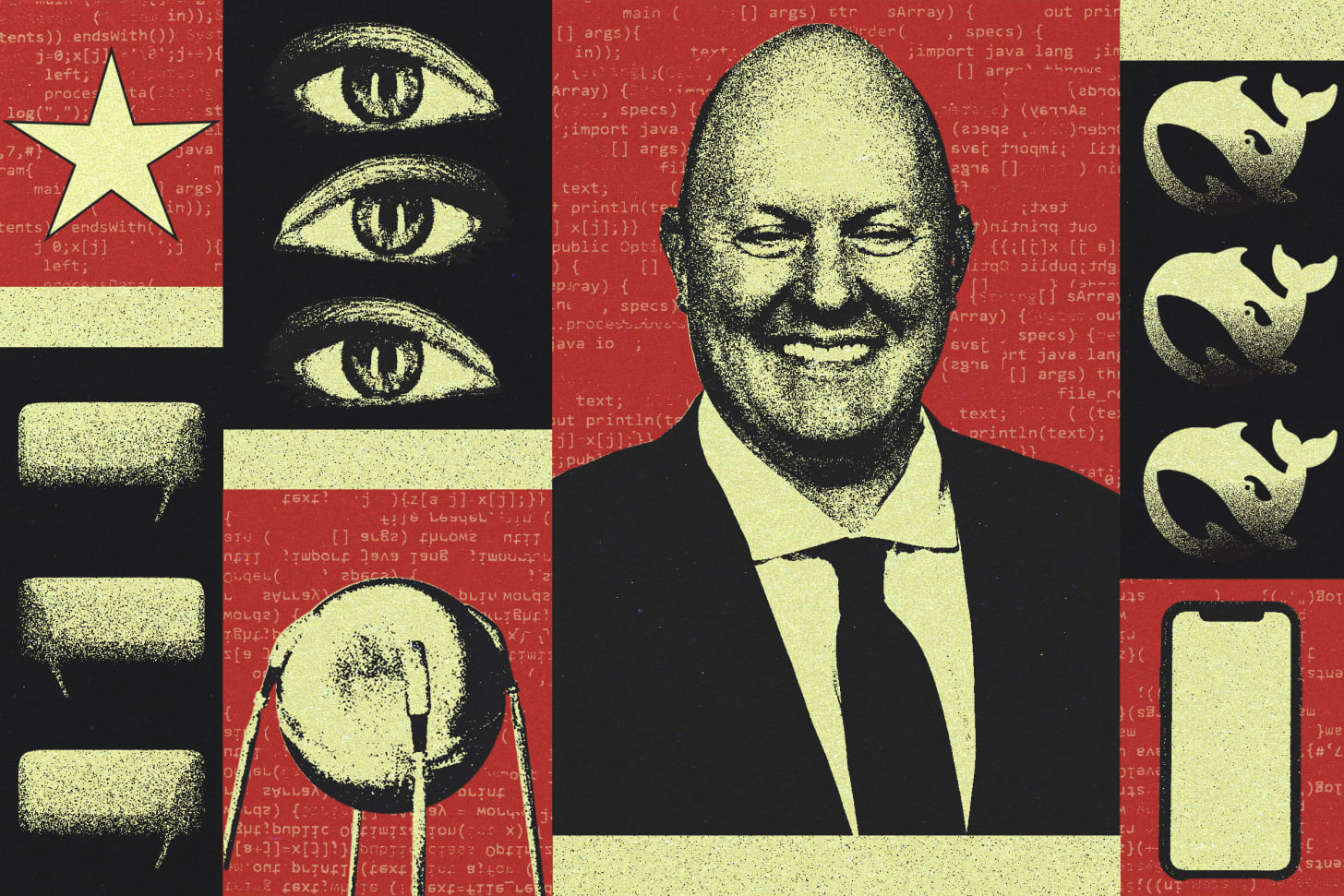

I'll probably have more to say on DeepSeek in the future, but for now, I’ll leave you with this awesome illustration Kyle Victory did for my piece:


Complicated - sure. But outside bids will be needed to properly assess the fair market value of OpenAI's assets and in that regard, Elon actually may have been helpful by putting a stake in the ground with a price tag.

If OpenAI continues down the path of converting to a public benefit corporation, that could well be a major improvement over its current unwieldy nonprofit-plus-for-profit arrangement, but only if OpenAI uses the transition to tidy up some important things, including:

-- Embedding genuine, specific, iron-clad public-benefit mandates into the new charter

-- Properly compensating the original nonprofit for its assets (thus funding the public benefit of the nonprofit's goals)

-- Implementing stronger organizational and policy governance that, among other things, delineates conflict-of-interest policies, and establishes transparent oversight

-- Locking in an ownership stake or mechanism that enables philanthropic aims to persist over time should AI valuations skyrocket in the future

In fact, all of that is true regardless of what form of entity OpenAI ultimately takes (even if it keeps its current form) and regardless of who ponies up the dollars. One way or another, they've got to fix the structural vulnerabilities and vague boundaries that led to the controversies, legal uncertainties, board upheavals and the current condition in the first place.