Is the AI Doomsday Narrative the Product of a Big Tech Conspiracy?

A close reading of what tech titans actually say on the matter

It’s still Giving Tuesday somewhere

Before we dive in, a gentle request for money (but not for me).

This Giving Tuesday, I’m joining twelve other Substackers in raising funds for GiveDirectly, a nonprofit that sends no-strings-attached cash to people living in extreme poverty. It works — research shows cash transfers have profound, lasting impacts. I worked there for nearly two years, and I’m thrilled to support their mission again. You can read more in the Appendix section.

It’s not too late to donate! This campaign is open through the end of the month.

Back to our irregularly scheduled programming. This one’s a bit longer than usual — it’s been on my mind for over a year. If you just want to see what tech execs have said on this topic, I made a compilation here.

The AI industry’s existential angst

The leaders of the world’s most capable AI companies have done something unusual: they’ve all stated publicly that the technology they are building might end the world.

Executives at the top-three AI companies, OpenAI, Anthropic, and Google DeepMind, are all on the record saying that AI could lead to human extinction. Elon Musk, who runs his own leading AI company, xAI, has been loudly warning about AI existential risk (x-risk) for over a decade.

(The typical thinking is that the first system to rival humans across the board, often called artificial general intelligence (AGI), could recursively self-improve until it is capable or scaled up enough to be able to overpower all of humanity. Humans could effectively find themselves subject to the whims of a new dominant species that treats us the way we treat non-human animals, as a curiosity, an instrument, or an afterthought.)

This strange phenomenon has generated lots of attention and a heap of skepticism. One common argument is that these executives are cynically drumming up fears about AI-driven extinction to hype up their products and/or defer regulatory action to some future, unspecified date. Some go as far as to say that the prominence of the AI x-risk narrative is actually a result of a Big Tech conspiracy, designed to distract from the technology’s immediate harms and embarrassing shortcomings. Popularized by critics on the left, variants of this argument have recently been advanced by Ted Cruz and JD Vance.

It’s a compelling story, and it plays to a warranted skepticism of people who warn about AI’s risks while seemingly doing everything they can to accelerate its progress.

The statements made by the executives at the top-three AI companies on x-risk are well-documented, so I won’t go through them exhaustively here. But far less attention has been paid to what other tech titans have said on the matter.

This might be because their statements undermine the narrative, but it might just be because they don’t say interesting things! The more typical Big Tech CEO says something like: AI promises to transform the world, overwhelmingly for the better, but we should still be careful to manage its risks (which actually aren’t that bad).

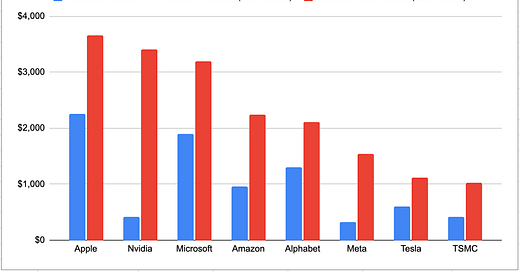

While the rise of AI has shuffled around the ranking of the most valuable tech companies, they’ve all done very well in the two years since ChatGPT was released.

At the time of this writing, nine of the world’s ten most valuable companies are tech firms, and all are worth over one trillion dollars. Nvidia, which makes the vast majority of the GPUs used to train large AI models, grew a staggering 701%, from $421 billion to $3.4 trillion. It now trades places with Apple for the title of ‘most valuable company in the world.’

All of these tech giants outperformed the S&P 500 in this period. In fact, half of all growth in the S&P 500 over the last two years is attributable to just these nine companies.

So if their interests are served by the AI x-risk narrative, we should expect tech executives to be some of its biggest proselytizers.

But in practice, Musk is the only current leader of a Big Tech firm to state explicitly that AI poses an x-risk.

Last year, Bill Gates signed onto an open letter stating that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” But he hasn’t held a formal role at Microsoft since 2020.

So what do they actually say in public?

The other tech titans generally dismiss the possibility of extinction entirely while arguing that the benefits of AI will significantly outweigh any risks. As in, just what you’d expect from corporate executives. If you’re interested in the extended quotes, I compiled them here.

Microsoft CEO Satya Nadella essentially waves away x-risk concerns, telling WIRED in June 2023 he's “not at all worried about AGI showing up, or showing up fast.” Instead, he frames AI as potentially “bigger than the industrial revolution,” bringing abundance to all eight billion people on Earth.

Microsoft CTO Kevin Scott, however, was one of the few Big Tech executives to sign the extinction letter.

Meta's Mark Zuckerberg has been consistently dismissive. In a 2016 interview, he was asked if fear of AI takeover was “valid” or “hysterical.” His reply: “more hysterical.”

And here’s Zuckerberg in April:

In terms of all of the concerns around the more existential risks, I don't think that anything at the level of what we or others in the field are working on in the next year is really in the ballpark of those types of risks.

This is a much more precise answer, and it implies some movement toward taking the possibility more seriously, but it is still a far cry from hyping up x-risk.

Amazon's leadership is similarly optimistic. Back in 2018, company chairman Jeff Bezos said, “The idea that there is going to be a general AI overlord that subjugates us or kills us all, I think, is not something to worry about. I think that is overhyped.” He doubled down on Lex Fridman’s podcast last December, saying, “These powerful tools are much more likely to help us and save us even than they are to, on balance, hurt us and destroy us.”

Amazon CEO Andy Jassy appears to stick to pure optimism. In an April 2024 letter to shareholders, he gushed about the “GenAI revolution,” writing that, “The amount of societal and business benefit from the solutions that will be possible will astound us all,” while remaining silent on extinction risks.

When Apple CEO Tim Cook was asked about AI extinction on Good Morning America in June 2023, he called for regulation but stayed mum on the actual question.

Nvidia CEO Jensen Huang, who has perhaps benefited more than anyone from the AI boom, has been remarkably cavalier about AI risk. Take this passage from a November 2023 New Yorker profile, after the author voices his dread at the seemingly imminent obsolescence of humanity:

Huang, rolling a pancake around a sausage with his fingers, dismissed my concerns. “I know how it works, so there’s nothing there,” he said. “It’s no different than how microwaves work.” I pressed Huang — an autonomous robot surely presents risks that a microwave oven does not. He responded that he has never worried about the technology, not once. “All it’s doing is processing data,” he said. “There are so many other things to worry about.”

But Huang's public comments in the profile reveal some tension. At a speaking engagement, he acknowledged concerns about “doomsday AIs” that could learn and make decisions autonomously, insisting that, “No AI should be able to learn without a human in the loop.” In response to an audience question, he predicted that AI “reasoning capability is two to three years out,” a timeline that sent murmurs through the crowd.

(It’s not clear to me if Huang is claiming that AI will never be able to learn without human involvement, or if he’s saying it should never be allowed to happen. The former is at odds with the position of the most cited researchers in the field. The latter will not happen without regulation, as the competitive pressures will prompt us to cede more and more decision-making powers to autonomous systems.)

I couldn’t find any public statements on this topic from CC Wei, chief executive of TSMC, which manufactures almost all the semiconductors used in advanced AI development.

Google is a bit more complicated. In an interview largely buried in the paywalled “Overtime” section of an April 2023 episode of 60 Minutes, CBS News’ Scott Pelley speaks with Google CEO Sundar Pichai, who says:

I’ve always thought of AI as the most profound technology humanity is working on. More profound than fire or electricity or anything that we’ve done in the past… We are developing technology which, for sure, one day, will be far more capable than anything we’ve ever seen before.

Later on, there’s this notable exchange:

Pelley: What are the downsides?

Pichai: I mean the downside is, at some point, that humanity loses control of the technology it’s developing.

Pelley (voice over): Control, when it comes to disinformation and generating fake images.

I’m not sure if Pelley is just paraphrasing what Pichai says next, or speculating as to what he meant, but it’s hard to reconcile the claims that AI will inevitably be far more capable than any past technology, that we might lose control of it, and that the biggest downside will be disinformation.

(Props to Jason Aten for finding and writing up this interview.)

Former Google CEO Eric Schmidt has publicly expressed concern that AI poses an “existential risk,” which he defined as “many, many, many, many people harmed or killed,” at a Wall Street Journal event in May 2023. However, he has consistently emphasized “misuse” risk, i.e. a bad actor uses AI to cause harm, rather than “misalignment” risk, i.e. humanity loses control of a powerful AI.

Where you come down on the relative risk of misuse vs. misalignment tends to have significant implications for where you fall on the spectrum from ‘race China to build AGI first’ on one side to ‘shut it all down because AGI will kill everyone by default’ on the other (this continuum likely warrants its own future post).

Schmidt advocates for racing China to build AGI, arguing at Harvard in November that even a few months' lead could provide “a very, very profound advantage.” He also claimed that the US is now falling behind China in the AI race, an abrupt departure from his assessment to Bloomberg only six months earlier that the US was “two or three years” ahead of China on AI.

Despite acknowledging the need for some international guardrails, like restrictions on autonomous weapons, Schmidt tells the Harvard audience that he remains fundamentally optimistic about AI. And in a November essay in the Economist, he writes that the technology could reset the “baseline of human wealth and well-being,” concluding that “Just that possibility itself demands that we pursue it.”

This seemingly contradictory position — emphasizing AI's destructive potential while advocating for its accelerated development — may be both a product and a consequence of Schmidt’s deep ties to the national security establishment, like his past leadership of both the National Security Commission on Artificial Intelligence and the Pentagon's Defense Innovation Board.

Google co-founder Sergey Brin does not appear to have made any public statements on AI x-risk.

What do they (reportedly) say in private?

It’s possible that these deca-billionaires are singing a different tune in private, but privacy doesn’t mean as much when you’re that famous.

According to multiple independent sources, Google’s other co-founder, Larry Page, thinks AI could kill us all — he just doesn’t seem to care. In private settings, he’s reportedly dismissed efforts to prevent AI-driven extinction as “speciesist” and “sentimental nonsense,” viewing superintelligent AI as “just the next step in evolution.”

Zuckerberg has long had strong feelings about the idea that AI might drive humanity extinct. He wants people to stop talking about it. At least that’s what he asked of Elon Musk when they first met back in 2014, according to Cade Metz’s book Genius Makers. Zuckerberg invited Musk with the intention of getting him to tone it down. He even invited backup from some prominent Facebook researchers, including Yann LeCun (who has himself become one of the loudest promoters of the idea that AI industrialists are fear-mongering to capture regulators). Metz writes that during his meeting with Musk, Zuckerberg “didn’t want lawmakers and policy makers getting the impression that companies like Facebook would do the world harm with their sudden push into artificial intelligence.”

The pattern is clear: the executives at the biggest tech companies publicly downplay or dismiss existential risks while emphasizing AI's benefits.

If hyping up x-risk actually serves Big Tech's interests, shouldn’t we expect to see more titans joining that chorus?

And what of the true believers?

You can even see a transformation underway in the founders who kicked off the generative AI revolution.

OpenAI CEO Sam Altman has a long track record of frankly (sometimes glibly) discussing x-risk from AI. However, as OpenAI has gotten closer to profitability and Altman closer to power, the CEO has actually begun downplaying the idea of AI-driven extinction.

In February 2015, nearly a full year before OpenAI was formally founded, Altman opened a personal blog post with this stark sentence: “Development of superhuman machine intelligence (SMI) is probably the greatest threat to the continued existence of humanity.” In June 2015, as he was co-founding OpenAI, Altman said publicly, “AI will probably, most likely lead to the end of the world, but in the meantime, there’ll be great companies.”

However, in an interview just before he was briefly dethroned in November 2023, Altman said, “I actually don’t think we’re all going to go extinct. I think it’s going to be great. I think we’re heading towards the best world ever.”

And in a September blog post called “The Intelligence Age,” Altman doesn’t mention extinction at all. The only downside he specifies is the “significant change in labor markets (good and bad)” to come, but even here, his outlook is rosy:

most jobs will change more slowly than most people think, and I have no fear that we’ll run out of things to do (even if they don’t look like “real jobs” to us today).

(I documented Altman’s journey on this topic in greater detail in my Jacobin cover story here.)

Last summer, The New York Times called Anthropic “the White-Hot Center of AI Doomerism,” but even its CEO, Dario Amodei, has presented a sunnier perspective as his company has grown. In October, he published a 14,000-word essay called “Machines of Loving Grace” that outlined how incredible the world could be if things go well with AI, partly in a conscious attempt to respond to his reputation as a “doomer.”

Of everyone mentioned so far, excluding Musk, I think Amodei is the most genuinely worried about the risks from AI. So it’s notable that even he is changing his public emphasis.

Under pressures

I think what’s happening here is pretty straightforward. The people who are leading the charge on developing general AI systems have long histories of caring a lot about the technology. They thought it would be a huge deal, with nearly boundless upside and downside. As their companies grew, they started dealing with new pressures: investors, lawyers, regulators, and higher-ups (at least in the case of DeepMind once it was acquired by Google).

Of these pressures, the fear of regulatory action might be the most significant. Most of the AI industry does not want to be regulated, no matter what they may have said to the contrary. Google, OpenAI, and Meta all came out hard against California Senate Bill 1047, the first real attempt to implement binding AI safety guardrails in the US. Few of their arguments against the bill hold up under the slightest scrutiny, but Governor Gavin Newsom caved to political and industry pressure and killed it anyway.

If AI companies ever needed to rely on doomsday fears to lure investors and engineers, they definitely don’t anymore. As governments slowly turn their attention to the industry, most executives seem far more interested in maintaining the status quo of self-regulation than in promoting the idea that their products pose a risk to the whole world.

It’s also possible, of course, that their views actually updated in light of how AI was developing. (Though I think it would be a grave mistake to conclude from the fact that ChatGPT mostly complies with developer and user intent that we have any reliable way of controlling an actual machine superintelligence. The top researchers in the field say we don’t.)

Should we just ignore CEOs?

Some will see this exhaustive cataloguing as a big waste of time, arguing that these executives are so conflicted that their pronouncements contain no real information. Instead of reading tainted tea leaves, we should just ignore them and focus on what is said by others who have special insight into the technology, but no vested interest in playing its risks up or down. (This might include people like Yoshua Bengio, a deep learning pioneer who resisted the siren song of Big Tech and, last year, became one of the most prominent voices to warn that AI poses an extinction risk.)

I think this is a good instinct, but you can still learn things from what these executives do and don’t say, as well as how their statements have changed over time. And I’d wager that the things said a decade ago by the founders of the leading AI companies hew pretty closely to their actual views at the time.

About a year ago, I asked an employee at one of these companies whether the major AI founders took x-risk seriously, and they wrote back:

I don’t actually think the people talking about x risk are that motivated by hype or regulatory capture. I’ve discussed this at length with basically everyone relevant in very private settings. Usually it’s quite a genuine concern.

This matches my experience reporting on and interacting with the AI industry. Some of the people most worried about AI are conflicted, but many of them aren’t. And conflicts alone aren’t discrediting. Arguments and evidence should be evaluated on their merits.

And while AI doomsday narratives may not be the result of a Big Tech conspiracy, the real story is far more unsettling: some of the people closest to the technology are genuinely terrified of what they're building, while others can't wait to build it faster.

Appendix: More info on GiveDirectly

This fundraiser is near and dear to me because I spent nearly two years working at GiveDirectly! Unconditional cash transfers work really well — even better than expected. GiveWell, the rigorous charity evaluator, recently estimated that cash transfers were 3-4 times more effective than they previously thought thanks to positive spillover effects (in short, because a dollar spent is a dollar earned).

I’m particularly excited about the policy implications of GiveDirectly’s work and research. In addition to helping people who really need it, the nonprofit has been part of a larger effort to benchmark social policies against unconditional cash, which, in my view, is a welcome trend in development economics and public policy. (It might be even better to treat cash as the default.)

Great piece, thank you :) I think some of the confusion and side-switching from tech CEOs on AI x-risks might also be explained by another option - their different market strategies. From a great paper on open source AI entitled "OPEN (FOR BUSINESS): BIG TECH, CONCENTRATED POWER, AND THE POLITICAL ECONOMY OF OPEN AI" (highly recommended in its entirety):

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

p. 17, quote - It’s worth noting here that we’ve described two instances of lobbying through two entities tightly tied to Microsoft, seemingly in different directions. GitHub’s argument, outlined in section one [that the open source AI is good and should not be regulated], is self interested because they (and Microsoft) rely on open source development, both as a business model for the GitHub platform and as a source of training data for profitable systems like CoPilot. This makes sense for them, while OpenAI is arguing primarily that models “above a certain threshold" should not be open — a threshold that they effectively set due to resource monopolies Microsoft benefits from. So, open source exceptions are good for them. Arguing that open sourcing their powerful models is dangerous also benefits OpenAI — this claim both reasserts the power of their models and allows them to conflate resource concentration with cutting edge scientific development.

Could it be we've seen similar dynamics of playing to both sides with AI x-risk? Even if you're right that "If AI companies ever needed to rely on doomsday fears to lure investors and engineers, they definitely don’t anymore."

Satya Nadella in the interview from February 2023 https://www.youtube.com/watch?v=YXxiCwFT9Ms 16:55

Interviewer: And then I have to ask and I sound a little bit silly. I feel a little bit silly even contemplating it, but some very smart people ranging from Stephen Hawkins [sic!] to Elon Musk to Sam Altman, who I just saw in the hallway here, your partner at OpenAI, have raised the specter of AI somehow going wrong in a way that is lights out for humanity. You're nodding your head. You've heard this too.

Nadella: Yeah.

Interviewer: Is that a real concern? And if it is, what are we doing?

Nadella: Look, I mean, runaway AI, if it happens, it's a real problem. And so the way to sort of deal with that is to make sure it never runs away. And so that's why I look at it and say let's start with-- before we even talk about alignment and safety and all of these things that one should do with AI, let's talk about the context in which AI is used. I think about the first set of categories in which we should use these powerful models are where humans unambiguously, unquestionably are in charge. And so as long as we sort of start there, characterize these models, make these models more safe and, over time, much more explainable, then we can think about other forms of usage, but let's not have it run away.